Prototype to polish: Making games in CHICKEN Scheme with Hypergiant

Introduction

Hypergiant is a library for CHICKEN Scheme that tries to make it easy to make efficient games.[1] It rests on the belief that a game should start as a prototype that takes minimal effort to build, and that the prototype should then be extensible into whatever you want the final product to be. So Hypergiant attempts to find the sweet spot between ease of use and power. What Hypergiant doesn’t do is try to provide the same kind of rich tool-set as the big game engines – it’s just a library. It fits in between existing primitive-based libraries such as Allegro and SFML, or 2D/prototyping-oriented frameworks such as LÖVE, and full engines such as Unity – providing more advanced abstractions than the former and fewer features, but greater flexibility, than the latter.

Hypergiant is based on OpenGL, and encourages its use when needed. While you’ll be able to perform basic operations in Hypergiant without any knowledge of OpenGL, moving to the advanced features isn’t possible without understanding at least the fundamentals.[2] If you know OpenGL, though, Hypergiant gives you tools to perform many advanced tasks in an intuitive way, without sacrificing any speed. But Hypergiant is more than just OpenGL. It’s also:

- Window management

- Intuitive input processing

- Scene management

- Geometry creation and loading

- Texture loading

- Animated sprites

- Text rendering

- Procedural noise

- Math

- Cross-platform: Runs on anything that supports OpenGL

While this is a fairly typical feature list, Hypergiant has some special features that set it apart. For starters, working with OpenGL shaders and geometry is super easy. There’s no working with strings and zero boilerplate required. When you write a shader, you do it in a Scheme-like language, and rendering functions for that shader are automatically compiled to C. When these functions are combined with Hypergiant’s scene rendering system, the resulting rendering phase happens in pure C. Extending Hypergiant’s rendering capabilities is therefore easy and enjoyable, yet it compiles down to code that resembles hand-coded C. And this is, of course, combined with the inherent extensibility of Scheme, giving you freedom in working with graphical applications that is hard to beat.

This tutorial will walk through the fundamentals of Hypergiant while creating the prototype for a game. Then we’ll add a layer of polish onto that prototype, illustrating some of the extensibility of Hypergiant. An understanding of Scheme[3] is a prerequisite for the tutorial, while knowledge of OpenGL is an asset, but not strictly necessary.

If you’re wondering why Hypergiant is written for CHICKEN Scheme, take a look at appendix A. Appendix B provides some instructions for installing CHICKEN and Hypergiant, while appendix C offers some resources on OpenGL.

The prototype

Getting started

We’re going to make the game of Go, an ancient game of strategy with deceptively simple rules. Physically, Go has only two components: a playing board with a grid marked on it, and black and white markers called “stones”. For those familiar with Go, we’ll use the area scoring rules for this example.

To start out, we need to open a window. Hypergiant uses the start function to create a window and run the main loop. So our first simple Hypergiant program looks like this:

    (import chicken scheme)
    (use hypergiant)

    (start 800 600 "Go" resizable: #f)

This loads the CHICKEN core and Hypergiant, then calls start to create an 800 by 600 pixel window with the title “Go”. We are also specifying that the window should have a fixed size (i.e. be non-resizable).

I’ve put this in a file called go.scm and it can be run with CHICKEN’s interpreter – csi go.scm – or compiled to an executable – csc go.scm.

Since I like to be able to close my prototypes with a single key press, we’ll add a key binding to our program:

    (import chicken scheme)
    (use hypergiant)

    (define keys (make-bindings
                  `((quit ,+key-escape+ press: ,stop))))

    (define (init)
      (push-key-bindings keys))

    (start 800 600 "Go" resizable: #f init: init)

This defines a variable, keys, which is a list of key bindings. make-bindings takes a list of (ID KEY [ACTION]) lists. In this case the action for the escape key is to call the Hypergiant function stop when it is pressed. A new function init is also created. In it, our new variable keys is pushed onto the key bindings stack, making it the active set of key bindings. init is then passed to start as the argument to init:, which is called before the main loop starts.

If you run this example you’ll get a black 800 by 600 window that you can hit escape to exit.

Scenes, nodes and cameras

If we’re going to render anything, we need a scene to put things in. A scene is a shared space where objects “exist” (i.e. they have a position and orientation within the scene). The objects in a scene are called nodes. While some nodes may be invisible, for the most part nodes are put in scenes so that they can be drawn (a.k.a. rendered) onto the screen. In order to actually render the scene, you first need a camera. Each camera also has a position and orientation within a scene, and additionally has a particular projection. The two projections that Hypergiant supports are orthographic, which is suitable for a 2D style game, and perspective, which is used to give three dimensional objects depth.

So to start we’ll create some parameters with which to hold a camera and a scene, and we’ll initialize them in the init function.

    (define scene (make-parameter #f))
    (define camera (make-parameter #f))

    (define (init)
      (push-key-bindings keys)
      (scene (make-scene))
      (camera (make-camera #:perspective #:position (scene)
                           near: 0.001 angle: 35)))

Our camera is given a perspective projection with a near plane at 0.001 and a view angle of 35°. The camera is a #:position camera, which means that its position in the scene is set in the same way as nodes: by specifying a position and a rotation quaternion. Other types of camera positioning systems are provided, such as orbital cameras (which orbit around a particular point), look-at cameras, and first-person cameras.

When we run this, we still don’t see anything in the window. That’s because we haven’t added any nodes yet. We’ll do this in the next section.

Meshes and render-pipelines

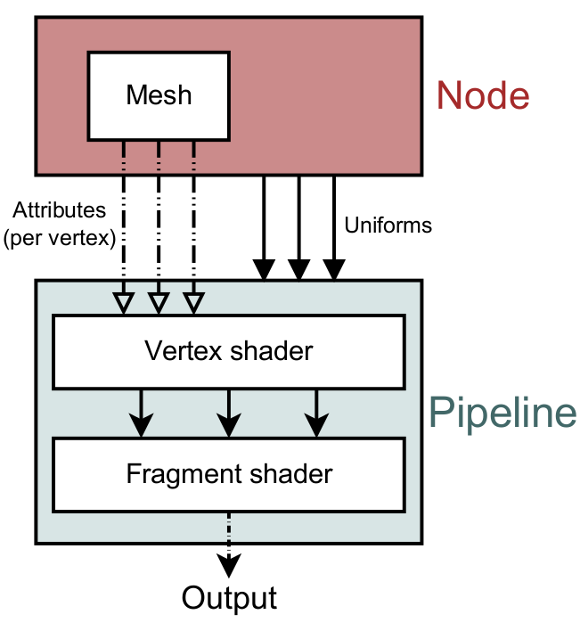

Now we’re going to add a Go board to the scene. Boards in Go are typically made of wood, and have a grid painted on. Our board in the game will be a rectangle that spans from (0 0) to (1 1) in our scene (plus a bit of extra space around the edge). In order to do this, we need to understand two more concepts Hypergiant uses frequently for rendering. Meshes are the what of what you are rendering while render-pipelines are the how. In other words, meshes are your geometry – which for a 2D game is often just a rectangle – with added data associated with each vertex, such as texture coordinates, colours, or normals (the direction perpendicular to the surface at the vertex). Each type of data associated with a vertex is called an attribute. Render-pipelines, on the other hand, instruct Hypergiant on how the geometry is to be rendered. The same geometry can end up looking completely different depending on how it’s rendered – think about how the same model would look if it was rendered with traditional 3D graphics versus cel-shading.

Hyperscene uses the add-node function to create a new node in the scene. This function takes a mesh and a render-pipeline.

    (import chicken scheme)
    (use hypergiant)

    (define keys (make-bindings
                  `((quit ,+key-escape+ press: ,stop))))

    (define scene (make-parameter #f))
    (define camera (make-parameter #f))

    (define board-mesh (rectangle-mesh 1.2 1.2
                                       color: (lambda (_)
                                                '(0.5 0.4 0.2))))

    (define (init-board)
      (add-node (scene) color-pipeline-render-pipeline
                mesh: board-mesh
                position: (make-point 0.5 0.5 0)))

    (define (init)
      (push-key-bindings keys)
      (scene (make-scene))
      (camera (make-camera #:perspective #:position (scene)
                           near: 0.001 angle: 35))
      (init-board))

    (start 800 600 "Go" resizable: #f init: init)

Here we’ve updated the example to include a new variable, board-mesh, in which we created a rectangle mesh with a width and length of 1.2. This rectangle has a colour associated with each vertex since we’re passing a function that returns a colour to the color keyword. In this case we are having our colour function return the same colour at every point.

We’ve also created a new function init-board and added it to init. Right now init-board simply adds the board-mesh to the scene that we’ve created. We set the position of the board to be at (0.5 0.5 0). This moves the centre of the rectangle to that point, which allows the rectangle to cover the space from (0 0) to (1 1) like we wanted.

We’ve passed color-pipeline-render-pipeline as the second argument of add-node, which expects a render-pipeline. This particular render-pipeline is used to render geometry that has a colour associated with each position. Hypergiant has a few built in render-pipelines, but we’ll be talking about how you can create your own later on. Basically, every vertex of your mesh must have the attribute data that a pipeline expects, and when you add a node you need to pass along pointers to any uniform data – data that is shared for the entire mesh – that the pipeline expects (except for uniforms that add-node passes automatically).

If we run this, though, we still don’t see anything. So what’s going on? Well, the camera defaults to the origin, looking in the negative Z direction, while our rectangle is sitting at (0.5 0.5 0). In other words, our rectangle is occupying the same plane that our camera is. Since, just like in real life, the camera needs to be pointed at what we’re looking at (and not overlapping it), we need to move our camera.

(define (init)

(push-key-bindings keys)

(scene (make-scene))

(camera (make-camera #:perspective #:position (scene)

near: 0.001 angle: 35))

(set-camera-position! (camera) (make-point 0.5 0.5 2))

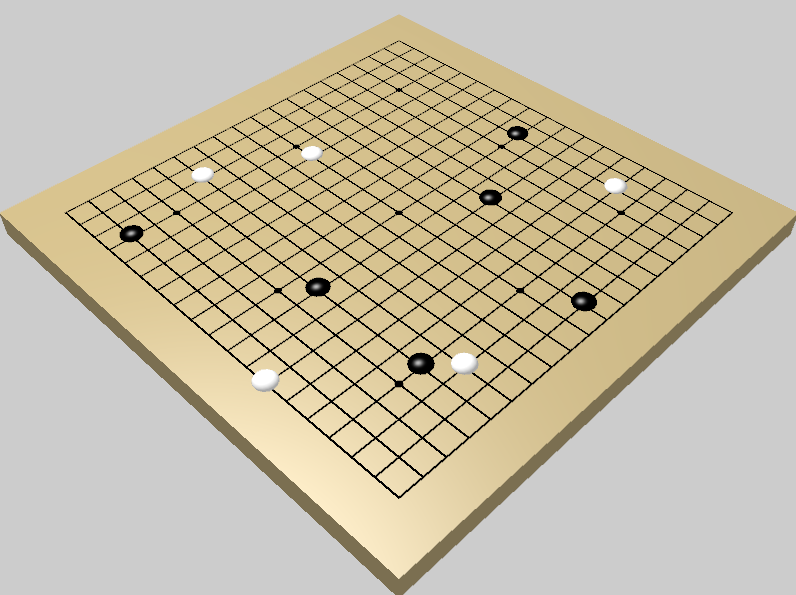

(init-board))So we modify our init function to set the camera’s position, moving it back (positive along the Z axis) and centred on the board. Now when we run the example, we can see our board:

This isn’t really close to what a Go board should look like though: we need a grid so that we know where to place the stones. Further, that grid normally has markers on it, to make judging distances easier. To accomplish this, we’ll make a new mesh that combines the grid with the markers, that we’ll draw over the board.

A grid is made out of lines, but if we want our lines to have any thickness, they’ll actually have to be rectangles. We already know how to make rectangles, so let’s define one:

    (define line-width (/ 256))
    (define grid-line (rectangle-mesh (+ 1 line-width) line-width
                                      centered?: #f))

We now have a single mesh representing one line with a length of 1 (the playing area length) plus line-width (so that the ends join nicely), and a width of line-width. The origin is not the centre of this rectangle (as it was for the last one), but rather is at the bottom left corner, which is why the translations below shift each line by half a line width.

So how can we turn this line into a grid? Hypergiant has a function that takes a list of (MESH . TRANSFORM-MATRIX) pairs, and returns a mesh that combines all of the given meshes with the positions specified by the matrices. So all we need is a function that returns these pairs:

    (define grid-rows 19)

    (define (build-grid)
      (let* ((-line-width/2 (- (/ line-width 2)))
             (line-spacing (/ (sub1 grid-rows)))
             (lateral-lines
              (let loop ((i 0) (lines '()))
                (if (= i grid-rows)
                    lines
                    (loop (add1 i)
                          (cons
                           (cons grid-line
                                 (translation
                                  (make-point -line-width/2
                                              (+ (* i line-spacing)
                                                 -line-width/2)
                                              0)))
                           lines)))))
             (vertical-lines
              (map (lambda (a)
                     (cons grid-line
                           (translate (make-point 0 1 0)
                                      (rotate-z (- pi/2)
                                                (copy-mat4 (cdr a))))))
                   lateral-lines)))
        (append lateral-lines
                vertical-lines)))

Here we’re making 19 lateral lines and 19 vertical lines, and pairing them with matrices that arrange them into a grid. The vertical-lines are just copies of the lateral-lines matrices, rotated and translated. While there’s a bit of fiddling needed to get the lines into the right place, this should look fairly straightforward.
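As a quick sanity check on the arithmetic in build-grid, here is a small sketch in plain Scheme that needs no Hypergiant at all. The helper line-offsets is a name made up for this illustration (it is not part of the tutorial code); it lists the Y offset that each lateral line’s translation receives, so you can confirm that there are 19 of them and that the first and last lines end up centred on 0 and 1.

```scheme
;; Plain-Scheme sketch of the offsets computed inside build-grid.
;; `line-offsets` is a hypothetical helper used only for illustration.
(define grid-rows 19)
(define line-width (/ 1 256))

;; Offset of line i: its grid position (i * spacing) minus half a line
;; width, so that a line of non-zero thickness is centred on its row.
(define (line-offsets)
  (let ((spacing (/ 1 (- grid-rows 1)))
        (half-width (/ line-width 2)))
    (let loop ((i (- grid-rows 1)) (acc '()))
      (if (< i 0)
          acc
          (loop (- i 1)
                (cons (- (* i spacing) half-width) acc))))))

(define offsets (line-offsets))

(display (length offsets)) (newline)       ; how many lines there are
(display (car offsets)) (newline)          ; offset of the first line
(display (list-ref offsets 18)) (newline)  ; offset of the last line
```

Because the rectangle’s origin is at its corner rather than its centre, subtracting half the line width is what makes the first line straddle 0 and the last line straddle 1.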

We mentioned earlier that we also want our grid to have markers. These should be circles in a 3x3 grid, spaced six grid-nodes apart, such that the outer circles are three nodes separated from the edge.

    (define marker (circle-mesh (/ 120) 12))

    (define (build-markers)
      (let* ((3nodes (/ 3 (sub1 grid-rows)))
             (15nodes (/ 15 (sub1 grid-rows)))
             (marker-points `((,3nodes . ,3nodes)
                              (,3nodes . 0.5)
                              (,3nodes . ,15nodes)
                              (0.5 . ,3nodes)
                              (0.5 . 0.5)
                              (0.5 . ,15nodes)
                              (,15nodes . ,3nodes)
                              (,15nodes . 0.5)
                              (,15nodes . ,15nodes))))
        (map (lambda (p)
               (cons marker
                     (translation (make-point (car p) (cdr p) 0))))
             marker-points)))

This is even more straightforward than build-grid. Here we use a circle mesh with a radius of 1/120 and 12 segments (since circles are really constructed out of triangles).
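The nine marker positions can also be generated rather than written out by hand. The following is only a sketch in plain Scheme: marker-indices, index->coord and marker-points are illustrative names that do not appear in the tutorial code, and the result is a list of (x . y) pairs in the same order as the quasiquoted list above.

```scheme
;; Plain-Scheme sketch: derive the marker coordinates from grid indices.
;; All names here are hypothetical and used only for illustration.
(define grid-rows 19)

;; Markers sit three intersections in from each edge and at the centre,
;; i.e. at indices 3, 9 and 15 on a 19-line grid.
(define marker-indices '(3 9 15))

;; Convert a line index (0..18) into a board coordinate (0..1).
(define (index->coord i)
  (/ i (- grid-rows 1)))

;; Every (x . y) combination of the marker indices.
(define (marker-points)
  (apply append
         (map (lambda (x)
                (map (lambda (y)
                       (cons (index->coord x) (index->coord y)))
                     marker-indices))
              marker-indices)))

(define points (marker-points))
(display (length points)) (newline)
```

Index 9 of 18 is exactly halfway across the board, which is why the hand-written version can use the literal 0.5 for the middle row and column.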

Now we combine them together with the function we had mentioned earlier:

    (define board-grid-mesh (mesh-transform-append
                             'position
                             (append (build-grid)
                                     (build-markers))))

The 'position is there in order to tell mesh-transform-append which attribute of the meshes it should be transforming. All of Hypergiant’s mesh creation functions use an attribute named position to denote a vertex’s position (appropriately enough). Now all we need to do is modify init-board:

    (define (init-board)
      (add-node (scene) color-pipeline-render-pipeline
                mesh: board-mesh
                position: (make-point 0.5 0.5 0))
      (add-node (scene) mesh-pipeline-render-pipeline
                mesh: board-grid-mesh
                color: black
                position: (make-point 0 0 0.0003)))

We’re adding our new mesh, this time with the mesh-pipeline-render-pipeline. This pipeline uses meshes that have only a position attribute, but it takes a color: argument that it uses to colour the mesh, which we’re choosing to make black. The mesh is also very slightly forward on the Z axis, compared to the board-mesh. This is so that the grid and markers appear in front of the board rectangle.

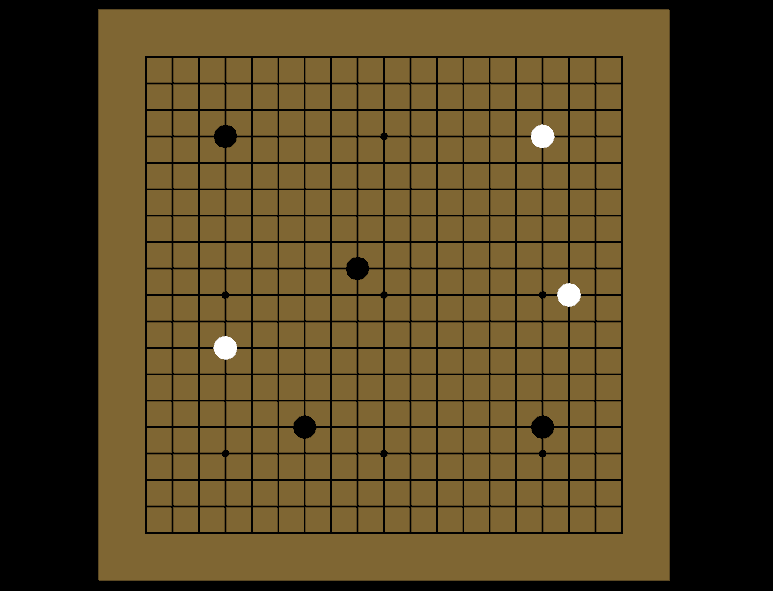

We now have something that looks like a Go board!

More input and the finished prototype

Now that we have a board, we need some way of adding stones to the board. Every turn consists of a stone being placed on one of the intersections of the grid, first a black stone then alternating white and black. But we also need some way of keeping track of what stones are where. So first we’ll set up some state for the game. We’ll use a record to store the data of each of the nodes on the board. Three things will be tracked for each node: the index of the node (an (x . y) pair corresponding to the position on the grid rows and columns), the colour of the stone on the node (if any), and the scene node (the object returned by add-node) associated with the stone on the node (if any). We then set up the game state: a two dimensional grid of nodes, stored row by row in a single list (built using list comprehensions from srfi-42).

    (use srfi-42)

    (define-record node
      index color scene-node)

    (define game-state
      (make-parameter
       (list-ec (: i grid-rows)
                (: j grid-rows)
                (make-node (cons j i) #f #f))))

Now we’ll create the function that adds a stone to the scene:

    (define stone-radius (/ 40))
    (define stone-mesh (circle-mesh stone-radius 12))

    (define colors `((white . ,white)
                     (black . ,black)))

    (define (add-stone-to-scene node index)
      (let ((n (add-node (scene) mesh-pipeline-render-pipeline
                         mesh: stone-mesh
                         color: (alist-ref (node-color node) colors)
                         position: (make-point (/ (car index)
                                                  (sub1 grid-rows))
                                               (/ (cdr index)
                                                  (sub1 grid-rows))
                                               0.0006)
                         radius: stone-radius)))
        (node-scene-node-set! node n)))

This is pretty similar to code we’ve already seen. We’re again using the mesh-pipeline-render-pipeline, choosing the stone’s color value from the colors alist, and setting the position based on the node’s index. This goes to show that we can render the same mesh in multiple locations, with different appearances, by varying the uniform values. We also set the radius value of the stone, which tells the scene manager how big the bounding sphere around a node actually is. This won’t have any effect in this example, since by default the bounding sphere has a radius of 1, and the camera never moves outside of this sphere, but it’s worth knowing about.

Now that we can add a stone to the scene, how are we actually going to do it? The obvious solution is that, when we click on the window, we are telling the game to add a stone in that position. The trick is, how do we translate window coordinates into scene coordinates? We’ll do this using get-cursor-world-position:

    (define (get-cursor-board-position)
      (receive (near far) (get-cursor-world-position (camera))
        (let ((u (/ (point-z near) (- (point-z near) (point-z far)))))
          (make-point (+ (point-x near) (* u (- (point-x far)
                                                (point-x near))))
                      (+ (point-y near) (* u (- (point-y far)
                                                (point-y near))))
                      0))))

get-cursor-world-position, called with the camera that’s rendering the scene we’re interested in, returns two values: the point in the scene corresponding to the cursor position as projected against the near plane of the camera, and the cursor position as projected against the far plane of the camera. get-cursor-board-position interpolates between these two points (near and far) and returns the point on this line that lies on the Z = 0 plane. Since the game board is aligned with this plane, this will tell us where on the game board we’ve clicked.
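To see where u comes from: a point on the line can be written as near + u × (far − near). Requiring its Z component to be zero gives z_near + u × (z_far − z_near) = 0, and so u = z_near / (z_near − z_far). The sketch below repeats that calculation in plain Scheme, using ordinary lists for points instead of Hypergiant’s point type; lerp and intersect-z0 are names invented for this illustration.

```scheme
;; Plain-Scheme sketch of the interpolation in get-cursor-board-position.
;; Points are (x y z) lists here; `lerp` and `intersect-z0` are
;; hypothetical helpers, not Hypergiant functions.

;; Linear interpolation between a and b; u = 0 gives a, u = 1 gives b.
(define (lerp a b u)
  (+ a (* u (- b a))))

;; Where does the line through `near` and `far` cross the Z = 0 plane?
;; Assumes the two points have different Z values (otherwise the line is
;; parallel to the plane and the division below fails).
(define (intersect-z0 near far)
  (let* ((zn (caddr near))
         (zf (caddr far))
         (u (/ zn (- zn zf))))   ; solves zn + u * (zf - zn) = 0
    (list (lerp (car near) (car far) u)
          (lerp (cadr near) (cadr far) u)
          0)))

(define hit (intersect-z0 '(0.0 0.0 1.0) '(2.0 4.0 -3.0)))
(display hit) (newline)
```

With these sample points the line starts one unit above the plane and ends three units below it, so it crosses a quarter of the way along.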

Remember that the corners of the grid correspond to (0 0) and (1 1), so we still need to figure out which node we’ve clicked on. Since get-cursor-board-position returns a point on the Z = 0 plane, we want to quantize this point into the index ((x . y) pair) of the corresponding node. get-nearest-index does just this, while get-node is a utility function that retrieves the node from game-state that corresponds to one of these index pairs.
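The quantization and the list lookup can be tried in isolation with plain numbers before wiring them to the cursor. This is a sketch only: clamp, coord->index, nearest-index and index->offset are names made up for the illustration, and it assumes the game state is a flat list holding one row of 19 nodes after another.

```scheme
;; Plain-Scheme sketch of the arithmetic behind get-nearest-index and
;; get-node. All helper names are hypothetical.
(define grid-rows 19)

(define (clamp x lo hi)
  (max lo (min hi x)))

;; Board coordinate in [0, 1] -> nearest line index in [0, 18].
;; Coordinates outside the board are clamped to the nearest edge.
(define (coord->index c)
  (inexact->exact (round (* (clamp c 0 1) (- grid-rows 1)))))

(define (nearest-index x y)
  (cons (coord->index x) (coord->index y)))

;; Position of an (x . y) index in a flat, row-by-row list of nodes.
(define (index->offset index)
  (+ (car index) (* (cdr index) grid-rows)))

(display (nearest-index 0.52 0.97)) (newline)
(display (index->offset (nearest-index 0.52 0.97))) (newline)
```

The tutorial’s own versions, which work on Hypergiant points and the real game-state, are: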

    (define (get-nearest-index)
      (let ((n (vround (v* (vclamp (get-cursor-board-position) 0 1)
                           (sub1 grid-rows)))))
        (cons (inexact->exact (point-x n))
              (inexact->exact (point-y n)))))

    (define (get-node index)
      (list-ref (game-state)
                (+ (car index)
                   (* (cdr index)
                      grid-rows))))

We now define a bit of logic that governs which turn (white or black) is taking place, how a stone is added to a node (signalling an exception when a stone already exists at the given node), how stones are added to the game scene and state, and finally the function that we call when the cursor is clicked: cursor-board-press, which places a stone at the index given by get-nearest-index.

    (define turn (make-parameter 'black))

    (define (next-turn)
      (turn (if (eq? (turn) 'black)
                'white
                'black)))

    (define (add-stone node color)
      (when (node-color node)
        (signal (make-property-condition 'game-logic 'occupied node)))
      (node-color-set! node color))

    (define (place-stone index)
      (let ((color (turn))
            (node (get-node index)))
        (add-stone node color)
        (add-stone-to-scene node index)
        (next-turn)))

    (define (cursor-board-press)
      (place-stone (get-nearest-index)))

Finally we define some mouse bindings. These are created in exactly the same way as key bindings, but we add an additional call in the init function – push-mouse-bindings – which adds these new bindings to the active mouse bindings stack.

    (define mouse (make-bindings
                   `((left-click ,+mouse-button-left+
                                 press: ,cursor-board-press))))

    (define (init)
      (push-key-bindings keys)
      (push-mouse-bindings mouse)
      (scene (make-scene))
      (camera (make-camera #:perspective #:position (scene)
                           near: 0.001 angle: 35))
      (set-camera-position! (camera) (make-point 0.5 0.5 2))
      (init-board))

We now have all the pieces in place to finish up our prototype. Running the code and clicking around a bit results in something that looks like this:

The actual game logic is barely in place at this point. Clicking on a node that already contains a stone will crash the game, there’s nothing preventing you from making other illegal moves, and there’s no way to capture an opponent’s stones.
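One way to stop an occupied node from crashing the prototype is to catch the game-logic condition instead of letting it escape. The following is a sketch under assumptions, not the approach taken in the finished prototype: a small vector stands in for the game state, and add-stone! and try-add-stone! are hypothetical names. signal, make-property-condition and condition-case are CHICKEN’s own exception facilities; the cond-expand line only matters on CHICKEN 5, where they live in the (chicken condition) module.

```scheme
;; CHICKEN-specific sketch: catching the 'game-logic condition.
;; The vector `board` and both procedures are illustrative stand-ins.
(cond-expand
  (chicken-5 (import (chicken condition)))
  (else))

;; #f means empty; otherwise the colour of the stone on that node.
(define board (make-vector 4 #f))

;; Mirrors add-stone: signal a game-logic condition if the node is taken.
(define (add-stone! i color)
  (when (vector-ref board i)
    (signal (make-property-condition 'game-logic 'occupied i)))
  (vector-set! board i color))

;; Returns #t if the stone was placed, #f if the move was rejected.
;; Only conditions of kind game-logic are caught; anything else propagates.
(define (try-add-stone! i color)
  (condition-case
      (begin (add-stone! i color) #t)
    ((game-logic) #f)))

(display (try-add-stone! 0 'black)) (newline)  ; empty node
(display (try-add-stone! 0 'white)) (newline)  ; same node again
```

Wrapping the call to place-stone in a similar condition-case would let a click on an occupied node be ignored rather than ending the program.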

You can find the finished prototype here. I won’t go into details about the code that implements the game logic, since it is just pure Scheme. Getting all of the rules of Go implemented is not a trivial task, since there are many things that can render a move illegal, including duplicating a previous state of the game. Because of this, we use a transactional approach to updating the game state (made easy by parameters), rolling back to the previous state when a game-logic condition is signaled. The “liberties” (adjacent free nodes) of each like-coloured group of stones also need to be tracked, making some additional game state necessary.

This game logic is all incidental to Hypergiant, of course. The point is that we were able to get into a position to start adding all the real game logic fairly quickly: it took around 150 lines of code to create something that looks like Go, and from there we were free to focus on making the game logic.[4] When making a game that doesn’t already have a design set in stone, the ability to quickly get a small prototype working is even more valuable. By getting a prototype running quickly, you get more time to iterate on the actual game design.

The polish

A different angle

While we made a relatively complete game (insofar as all of the mechanics of Go are in place) in the last section, it’s not much to look at. Let’s see what we can do to make things a bit better looking.

You may have noticed that, rather than create an orthographic camera (as would be typical for a 2D game), the camera variable holds a perspective camera. This is because we’ll be turning this game of Go into 3D.

We’ll start by giving ourselves a camera angle that provides some perspective.

(define (init)

(gl:clear-color 0.8 0.8 0.8 1)

(push-key-bindings keys)

(push-mouse-bindings mouse)

(scene (make-scene))

(camera (make-camera #:perspective #:position (scene)

near: 0.001 angle: 35))

(set-camera-position! (camera) (make-point 1.6 -0.6 1.3))

(quaternion-rotate-z

(degrees->radians 45) (quaternion-rotate-x

(degrees->radians 45)

(camera-rotation (camera))))

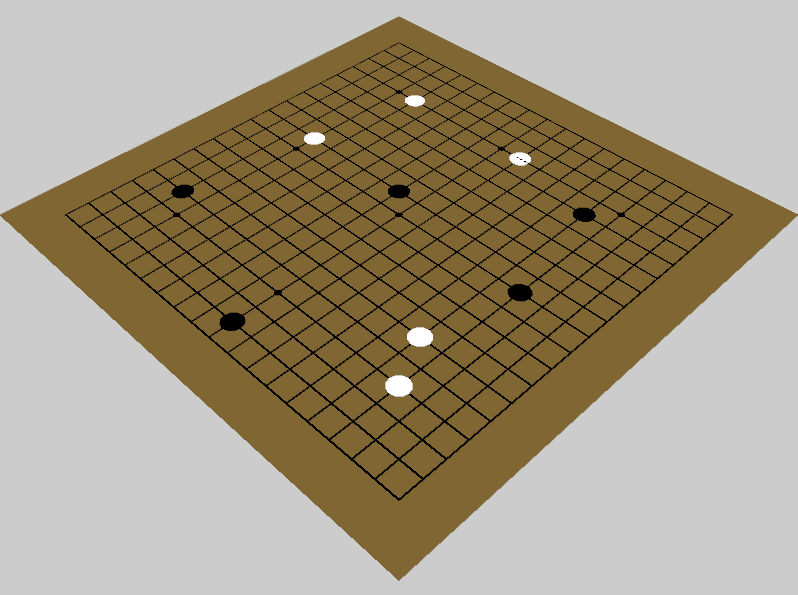

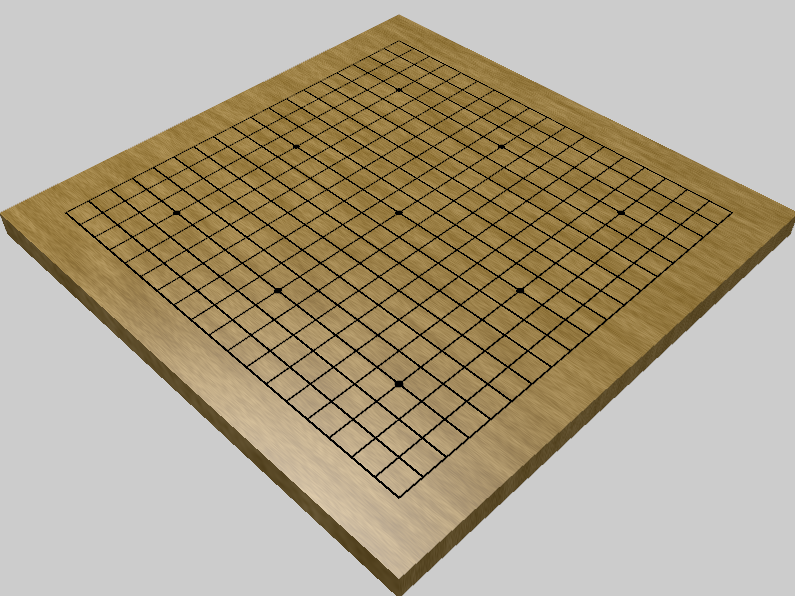

(init-board))We’ve once again updated our init function. First we added a call to gl:clear-color to make the background a bit more pleasing. Second, we modified the call to set-camera-position! to move the camera to the left, down, and back from where it used to be. This, combined with a rotation of the camera (done by taking the camera-rotation, rotating around the X-axis with quaternion-rotate-x then around the X-axis with quaternion-rotate-z), has given us what we see now:

If you look closely, you can see a bit of Z-fighting (objects behind another that are appearing in front of it) on the right-most white stone. When two objects are very close, sometimes they aren’t resolved properly. This can often be fixed by moving the near camera plane farther away, and indeed our near plane is very close. We’re not going to worry about this, though, since we’ll be changing the geometry in the next section and this problem will go away.

3D geometry

Using 2D meshes for a 3D game clearly looks pretty flat. Let’s add some depth to our models. This isn’t very hard – it just means we’ll be swapping the meshes that we’re using, with a bit of repositioning, as needed.

For the board, we’ll replace the rectangle with a cube (made with cube-mesh). Then we squish our cube into the shape we want using mesh-transform!:

    (define board-mesh (cube-mesh 1))
    (mesh-transform! 'position board-mesh
                     (3d-scaling 1.2 1.2 0.06))

mesh-transform! takes the name of the vertex attribute we want to transform, a mesh, and a transform matrix (in this case a scaling matrix, scaling the width and length to 1.2 and reducing the height to 0.06). Since this is a destructive operation, our board mesh now has the dimensions that we passed into the transform matrix.

Note that we also got rid of the color attribute that was present in the rectangle mesh. We’re going to make the colour that had been specified on a per-vertex basis (a.k.a. an attribute) into a uniform (per-node data). This will make things simpler in a bit, and additionally makes our mesh take up less memory.

We’ll also need to reposition the mesh, modifying our init-board function:

    (define brown (make-rgb-color 0.5 0.4 0.2 #t))

    (define (init-board)
      (add-node (scene) mesh-pipeline-render-pipeline
                mesh: board-mesh
                color: brown
                position: (make-point 0.5 0.5 -0.03))
      (add-node (scene) mesh-pipeline-render-pipeline
                mesh: board-grid-mesh
                color: black
                position: (make-point 0 0 0.0003)))

We’re moving the board -0.03 in the Z direction. Since it has a thickness of 0.06, the top face of the board is now aligned with the Z = 0 plane, as our rectangle mesh previously was. We’ve also changed the render-pipeline to the mesh-pipeline-render-pipeline that the grid is also using, and passed a colour that we just defined as the node’s color uniform value: brown. We defined brown using make-rgb-color with #t passed as the fourth, optional argument. This ensures that the location of the colour in memory doesn’t move, which is important for uniform values: once you pass a uniform value to OpenGL, it expects that object to stay in one place. This lets you later modify that colour value (still in-place) and the colour being rendered will change on the next frame.
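The -0.03 offset follows directly from the scaling. Here is a sketch of the arithmetic in plain Scheme, with a vertex as an ordinary (x y z) list; scale-point is a hypothetical helper that does to one position what a scaling matrix does to every position in the mesh. It assumes the cube produced by (cube-mesh 1) has sides of length 1 and is centred on the origin, which is what the alignment described above implies.

```scheme
;; Plain-Scheme sketch: where does the top of the squashed cube end up?
;; `scale-point` is a hypothetical stand-in for a scaling matrix.
(define (scale-point p sx sy sz)
  (list (* (car p) sx)
        (* (cadr p) sy)
        (* (caddr p) sz)))

;; One top corner of a unit cube centred on the origin (assumed layout).
(define top-corner '(0.5 0.5 0.5))

;; After scaling by (1.2 1.2 0.06) the corner sits at half of each size.
(define scaled (scale-point top-corner 1.2 1.2 0.06))

;; Moving the node by -0.03 in Z then puts the top face on Z = 0.
(define top-z (+ (caddr scaled) -0.03))

(display scaled) (newline)
(display top-z) (newline)
```

The same reasoning gives the board’s footprint: the scaled corner is 0.6 from the centre in X and Y, so a board centred on (0.5 0.5) overhangs the playing area by 0.1 on every side.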

We’ll now do roughly the same for the stones as we did for the board, replacing our circle with a squashed sphere:

(define stone-radius (/ 40))

(define stone-half-height 0.4)

(define stone-mesh (sphere-mesh stone-radius 16))

(mesh-transform! 'position stone-mesh

(3d-scaling 1 1 stone-half-height))

(define colors `((white . ,white)

(black . ,black)))

(define (add-stone-to-scene node)

(let* ((index (node-index node))

(n (add-node (scene) mesh-pipeline-render-pipeline

mesh: stone-mesh

color: (alist-ref (node-color node) colors)

position: (make-point (/ (car index)

(sub1 grid-rows))

(/ (cdr index)

(sub1 grid-rows))

(* stone-radius

stone-half-height))

radius: stone-radius)))

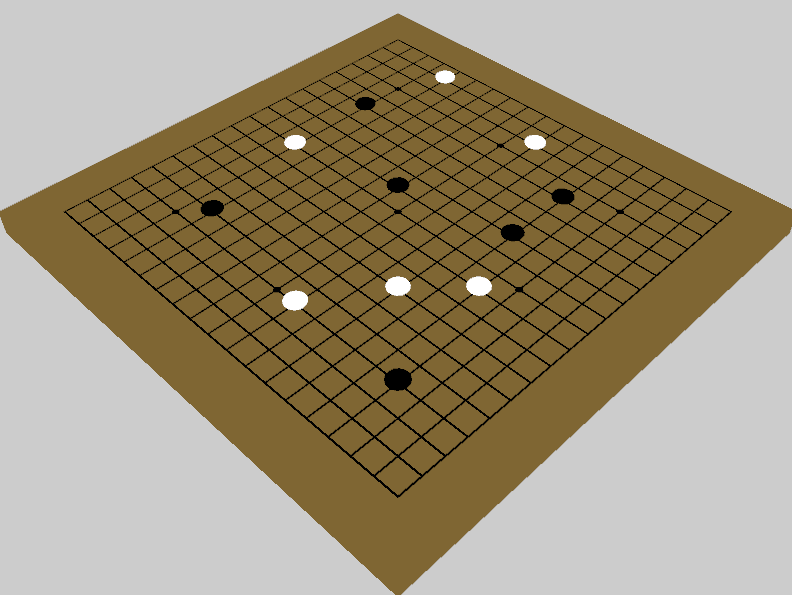

(node-scene-node-set! node n)))And the result looks like this:

… which doesn’t look too different from the previous screenshot. That’s because this scene is being rendered completely flat: every pixel of each mesh is given the same colour. If we want to give a better feeling of depth, we’ll need to add some shading, and shading is done with light.

Custom pipelines

Before we get into lighting our scene, it’s worth going into more detail about one of the most complex aspects of Hypergiant: pipelines and their associated object render-pipelines. So far we’ve been passing predefined render-pipelines to add-node which lets the scene know how to render a mesh. Now we’re going to be creating our own pipelines.

We’ve been working with meshes, such as the ones that represent our board and the stones, and we’ve noted how they represent per-vertex data. We’ve also created nodes, which are added to a scene. Every node we’ve created has a mesh associated with it, and additionally has some data that affects how the mesh is rendered. The per-vertex data are called attributes and the data that is associated with a node that affects how or where meshes are drawn (such as the node’s position in the scene or the colour with which to render a mesh) are called uniforms. Pipelines are objects that use these two types of data to determine how the mesh is rendered.

Pipelines typically have two shaders[5]: a vertex and a fragment shader. Each shader is a program that is compiled and run on the GPU. The vertex shader accepts all of the per-vertex attribute data as well as some (but not necessarily all) uniforms. It is responsible for positioning each vertex on the window. The fragment shader accepts any input from the vertex shader, as well as uniforms, and is responsible for colouring every pixel within the geometry defined by the vertices (a rasterisation step happens automatically before the fragment shader). Since no vertex or pixel needs to know what’s happening to its neighbours for the results to be computed, the shader programs are free to run in parallel.

In Hypergiant, when you create a pipeline, a render-pipeline is also created. This is a composite object that combines the pipeline (which is essentially code that runs on the GPU) along with the (CPU-based) code that performs the calls that start the rendering process. It is these render-pipelines that should be passed to nodes, since they have all of the information necessary with which to render an object.

Lighting

Now that we know a bit more about pipelines, let’s define one! This section and the next will probably be overwhelming if you’ve never used OpenGL’s shader language (GLSL) before.[6] If you haven’t, you can still try to follow along – I’ve done my best to explain things from the fundamentals. Otherwise feel free to skip ahead, pausing to look at the pretty screenshots.

    (define-pipeline phong-pipeline
      ((#:vertex input: ((position #:vec3) (normal #:vec3))
                 uniform: ((mvp #:mat4) (model #:mat4))
                 output: ((p #:vec3) (n #:vec3)))
       (define (main) #:void
         (set! gl:position (* mvp (vec4 position 1.0)))
         (set! p (vec3 (* model (vec4 position 1))))
         (set! n normal)))
      ((#:fragment input: ((n #:vec3) (p #:vec3))
                   use: (phong-lighting)
                   uniform: ((color #:vec3)
                             (inverse-transpose-model #:mat4)
                             (camera-position #:vec3)
                             (ambient #:vec3)
                             (n-lights #:int)
                             (light-positions (#:array #:vec3 8))
                             (light-colors (#:array #:vec3 8))
                             (light-intensities (#:array #:float 8))
                             (material #:vec4))
                   output: ((frag-color #:vec4)))
       (define (main) #:void
         (set! frag-color
           (light (vec4 color 1) p n)))))

This defines a new pipeline, phong-pipeline – so called because it is using Phong shading to implement the effect of lighting. This looks a bit complicated, so we’ll break it down into pieces. We can see that inside our definition we have two forms. Each of these forms represents a shader. The first shader is this:

    ((#:vertex input: ((position #:vec3) (normal #:vec3))
               uniform: ((mvp #:mat4) (model #:mat4))
               output: ((p #:vec3) (n #:vec3)))
     (define (main) #:void
       (set! gl:position (* mvp (vec4 position 1.0)))
       (set! p (vec3 (* model (vec4 position 1))))
       (set! n normal)))

The first element inside this list specifies the properties of the shader. This is a vertex shader that takes two inputs – attributes – (position and normal), two uniforms (mvp and model), and outputs two vectors (p and n). The rest of this list makes up the shader code. In this case there is only one element: the main function. As you can see, this looks largely like Scheme, but there are some differences. For one, every definition needs a type (for the position attribute this is vec3, and for the main function the return type is void). Second, the meanings of function calls aren’t necessarily going to be the same as those of their Scheme counterparts. For instance, * is used here to perform matrix multiplication – something that this function does not do in Scheme. Rather, this adheres to how multiplication is performed in GLSL.

In our main function we are setting three values. The first is gl:position. This should be set by every vertex shader’s main function: it defines where a given vertex ends up on the screen. In this shader, our input vertex is called position, which is an attribute that all of our meshes define. We’re taking this input vertex, extending it to be a four element vector, and multiplying it by the uniform matrix mvp. mvp is a uniform that nodes automatically supply. It is the matrix that represents the node’s “model” matrix (its position in relation to the scene) times the “view” matrix (the position of the camera) times the “projection” matrix (the type of projection that the camera uses). The multiplication with the mvp matrix positions the vertex (as it relates to the mesh) onto the screen (as it relates to the scene). Most shaders will perform this same action, since you generally want your vertex to end up in a particular location!
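In matrix form, and assuming the column-vector convention that the shader’s (* mvp (vec4 position 1.0)) implies, the model matrix is applied to the vertex first and therefore sits rightmost in the product. The symbols below are shorthand introduced here, not names from Hypergiant:

$$
\mathbf{p}_{\text{clip}} \;=\; \underbrace{P \, V \, M}_{\texttt{mvp}} \begin{pmatrix} x \\ y \\ z \\ 1 \end{pmatrix}
$$

Here $M$ is the node’s model matrix, $V$ the camera’s view matrix, $P$ the camera’s projection matrix, $(x, y, z)$ the vertex’s position attribute, and $\mathbf{p}_{\text{clip}}$ the value assigned to gl:position.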

The p and n vectors are output values, which means that they are being passed on for use in a later shader. We’re setting p to be the position of the vertex, in world coordinates (multiplying position by the model matrix, another automatically supplied uniform), and n to be the normal of the vertex.

    ((#:fragment input: ((n #:vec3) (p #:vec3))
                 use: (phong-lighting)
                 uniform: ((color #:vec3)
                           (inverse-transpose-model #:mat4)
                           (camera-position #:vec3)
                           (ambient #:vec3)
                           (n-lights #:int)
                           (light-positions (#:array #:vec3 8))
                           (light-colors (#:array #:vec3 8))
                           (light-intensities (#:array #:float 8))
                           (material #:vec4))
                 output: ((frag-color #:vec4)))
     (define (main) #:void
       (set! frag-color
         (light (vec4 color 1) p n))))

The fragment shader looks a bit more intimidating, but notice that the bulk of it is taken up by uniform definitions. If we gloss over those for now, we can see that again we have a main function, and in it we’re doing one thing: setting frag-color to the value returned by light. frag-color is specified as an output value, and fragment shaders expect a single vec4 output value, which they interpret as the RGBA pixel value for that fragment position.

Looking back to the shader’s properties, we see that it has two input values, p and n, which are set to the values taken from the vertex shader, as we might expect. However, the fragment shader operates on every fragment (roughly, every pixel) covered by the vertices, so these values will be interpolated between their per-vertex counterparts. Next we see something we didn’t in the vertex shader: a use value. This is importing the phong-lighting shader that Hypergiant defines. Looking at the documentation, we can see that this shader exports one function: light. This is the function that we’re using to compute the value of frag-color. It takes a surface-colour vector (which we build from the uniform color), a position vector (p), and a normal (n). Pretty straightforward, no?
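To make the interpolation concrete, here is a minimal sketch in plain Scheme (it is not Hypergiant or GPU code). It assumes simple linear interpolation between two vertices and ignores the perspective correction that graphics hardware normally applies. The names lerp and lerp-vec are hypothetical helpers used only for illustration.

```scheme
;; Linear interpolation between two numbers: t = 0 gives a, t = 1 gives b.
(define (lerp a b t)
  (+ (* (- 1 t) a) (* t b)))

;; Component-wise interpolation between two vectors represented as lists.
(define (lerp-vec v1 v2 t)
  (map (lambda (a b) (lerp a b t)) v1 v2))

;; A fragment halfway between two vertices whose world positions are
;; (0 0 0) and (1 2 0) would receive a p halfway between them.
(lerp-vec '(0.0 0.0 0.0) '(1.0 2.0 0.0) 0.5) ; => (0.5 1.0 0.0)
```

The same blending applies to n, which is why lighting varies smoothly across a face rather than jumping from vertex to vertex.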

However, if we look back at the uniforms that the shader is defining, we don’t see any of the uniforms except for color actually being used. If we examine phong-lighting’s documentation, we find that these other uniforms are required by this shader. Unfortunately, when you include a shader using use, you still need to list all of the uniforms that it requires in the shader that’s doing the including. Otherwise, Hypergiant won’t have the necessary information needed to compile the functions that render the shader.

So now that we’ve created our new pipeline, we need to modify our example in order to use it. You can think of pipelines as having a certain number of “slots”: its attributes and uniforms. Every node that uses that pipeline needs to fill all of those slots, and these slots are accessed by their name. So we need to make sure that the nodes that will use phong-pipeline supply the required attributes (meshes that have attributes named the same as the inputs to phong-pipeline) and uniforms (keyword arguments to add-node). We’ll be passing two sets of nodes to phong-pipeline for rendering: the board (just the rectangular bit) and the stones.

First we’ll look at what attributes are needed for phong-pipeline. We can see that it takes two: position and normal. position is always created by cube-mesh and sphere-mesh (and any of the SHAPE-mesh functions), but we need to modify these meshes so that they contain normals. Luckily, cube-mesh and sphere-mesh accept a keyword argument, normals?, that adds normals to the mesh when set to #t:

    (define board-mesh (cube-mesh 1 normals?: #t))
    ...
    (define stone-mesh (sphere-mesh stone-radius 16 normals?: #t))

Now looking at the uniforms, we see that first we have color. We’re already passing color to our board and stone nodes, so this is taken care of. Next we see a long list of uniforms that we have not seen before: inverse-transpose-model, camera-position, ambient, n-lights, light-positions, light-colors, light-intensities, and material. The good news is that all of these, except for the last one, are automatically passed to nodes… after we initialize the lighting extension for a given scene. We’ll do this by once more editing our init function – and while we’re at it, we should also add a light to the scene:

    (define (init)
      ...
      (scene (make-scene))
      (activate-extension (scene) (lighting))
      (set-ambient-light! (scene) (make-rgb-color 0.4 0.4 0.4))
      (add-light (scene) white 100 position: (make-point 0 0 2))
      ...)

After we create our scene, we’re now calling activate-extension with it and the lighting extension. This makes it so that we can add lights to the scene, and all of those uniforms are automatically given meaningful values. We then set the ambient light level (with set-ambient-light!) to a medium grey. Finally we create a light (which is really just a special node), with a white colour and an intensity of 100, setting its position to (0 0 2), which is located directly above the left corner of the board. We could add more lights (with different colours, even), or play with the location of this light, but I learnt through experimentation that I liked how things look with one light in this off-centre location.

I had mentioned previously that all of the uniforms needed for lighting were automatically passed to a node, except for one. The remaining uniform is called material. It specifies the material property of the object with respect to how it behaves when lit. Specifically it defines the specular colour (the colour that it reflects) and exponent (the sharpness of the light’s reflection). We’ll define a single material to be used by both the board and the stones that reflects a moderate amount of white light.

    (define shiny-material (make-material 0.5 0.5 0.5 10))

The first three values of make-material represent the RGB values of the colour, while the last represents the exponent.
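To give a feel for what the exponent does, the sketch below evaluates the specular term of the classic Phong model, the cosine of the reflection angle raised to the exponent, in plain Scheme. This is the textbook formula, assumed here for illustration; it is not a copy of what phong-lighting computes internally, and specular-falloff is a hypothetical name.

```scheme
;; Classic Phong specular falloff. cos-angle is the cosine of the angle
;; between the reflected light direction and the direction to the camera.
;; Negative cosines (light reflected away from the camera) contribute 0.
(define (specular-falloff cos-angle exponent)
  (expt (max 0.0 cos-angle) exponent))

;; With a higher exponent, the highlight fades much faster as the
;; viewing direction moves away from the reflection direction.
(specular-falloff 0.9 1)    ; broad highlight
(specular-falloff 0.9 10)   ; the exponent used by shiny-material
(specular-falloff 0.9 100)  ; very tight highlight
```

A low exponent therefore reads as a dull, spread-out sheen, while a high one reads as a small, sharp glint.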

Finally we need to modify our calls to add-node so that they use this new pipeline.

    (define (init-board)
      (add-node (scene) phong-pipeline-render-pipeline
                mesh: board-mesh
                color: brown
                material: shiny-material
                position: (make-point 0.5 0.5 -0.03))
      (add-node (scene) mesh-pipeline-render-pipeline
                mesh: board-grid-mesh
                color: black
                position: (make-point 0 0 0.0003)))

When we defined phong-pipeline, a render-pipeline named phong-pipeline-render-pipeline was also created. You’ll recall that this is the value that nodes are interested in, since they need to have all the information necessary to render a pipeline. The only other change we make is to pass shiny-material as the material uniform value. Then we’ll do the same for the stones:

(define (add-stone-to-scene node)

(let* ((index (node-index node))

(n (add-node (scene) phong-pipeline-render-pipeline

mesh: stone-mesh

color: (alist-ref (node-color node) colors)

material: shiny-material

position: (make-point (/ (car index)

(sub1 grid-rows))

(/ (cdr index)

(sub1 grid-rows))

(* stone-radius

stone-half-height))

radius: stone-radius)))

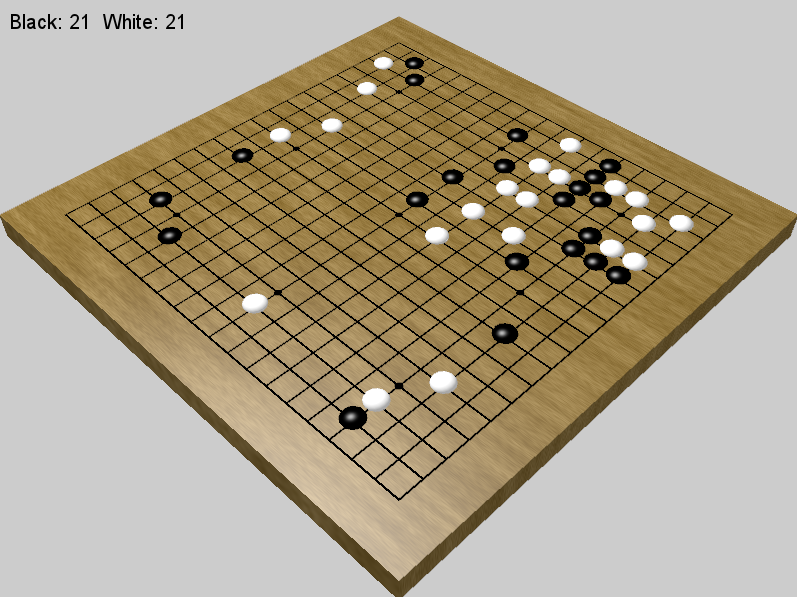

(node-scene-node-set! node n)))The result looks like this:

At this point in the tutorial we need to change the way our file is compiled. When you define shaders in Hypergiant, you must link to OpenGL while compiling. This means compiling with something like csc -lGL go.scm. You can still interpret the file in the same way, though.

Playing with noise

Our stones are looking pretty good. After all, in real life they are small, uniformly coloured, shiny objects. These are inherently an easy rendering task. Our board is a bit lacking, though. Go boards are typically made of wood, and right now this board could be generously described as looking like it’s made of plastic. Let’s do something to make it look more wood-like.

A typical solution would be to find a wood texture and apply it to the board. Since I enjoy procedural solutions, we’re not going to do that. Rather, we’ll define a new pipeline – one that implements a wood effect.

    (define-pipeline wood-pipeline
      ((#:vertex input: ((position #:vec3) (normal #:vec3))
                 uniform: ((mvp #:mat4) (model #:mat4))
                 output: ((p #:vec3) (n #:vec3)))
       (define (main) #:void
         (set! gl:position (* mvp (vec4 position 1.0)))
         (set! p (vec3 (* model (vec4 position 1))))
         (set! n normal)))
      ((#:fragment input: ((p #:vec3) (n #:vec3))
                   uniform: ((color #:vec3)
                             (camera-position #:vec3)
                             (inverse-transpose-model #:mat4)
                             (ambient #:vec3)
                             (n-lights #:int)
                             (light-positions (#:array #:vec3 8))
                             (light-colors (#:array #:vec3 8))
                             (light-intensities (#:array #:float 8))
                             (material #:vec4))
                   use: (simplex-noise-3d phong-lighting)
                   output: ((frag-color #:vec4)))
       (define (grain (resolution #:int)) #:vec4
         (let ((n #:float (snoise (* p
                                     (vec3 1 8 1)
                                     resolution))))
           (vec4 (+ color (* n 0.1)) 1)))
       (define (main) #:void
         (set! frag-color (light (* (grain 32) (grain 4)) p n)))))

The vertex shader of this pipeline is identical to that of phong-pipeline. The input, uniforms, and output for the fragment shader are also the same as those for phong-pipeline. The fragment shader first differs in that it uses another shader: simplex-noise-3d. This shader is one of several noise shaders that Hypergiant provides. Our strategy for making this wood texture will be to take some simplex noise and stretch, layer, and colour it. We’ll use three-dimensional noise so that we don’t need to worry about generating 2D coordinates: each fragment position will correspond to a position in the noise.

Looking at the documentation for simplex-noise-3d, we see that it exports two functions. We’ll only be using one of these functions: snoise. So far we’ve only seen shaders with one function in them (main). For this shader, we have two: grain and main. grain is a function of one argument: resolution, an integer. In grain, we are computing a 3D simplex noise value with snoise, based on the position of the fragment, stretched in the y direction (the position vector p is multiplied by the vector (1 8 1)), and then multiplied by resolution. This noise value (which falls between -1 and 1) is then scaled down and added to the color uniform. In main we calculate the final value of frag-color by multiplying together two values of grain at different resolutions, and passing that as the base colour to light.
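The arithmetic for a single colour channel can be checked in plain Scheme. The sketch below is not shader code: grain-channel and wood-channel are hypothetical names, the noise values are supplied by hand instead of coming from snoise, and the base channel value of 0.5 is an arbitrary example.

```scheme
;; One colour channel of grain: the base channel value plus a noise
;; value n in [-1, 1] scaled down by 0.1.
(define (grain-channel base n)
  (+ base (* n 0.1)))

;; main multiplies two grain values component-wise, so each channel is
;; the product of two such terms.
(define (wood-channel base n1 n2)
  (* (grain-channel base n1) (grain-channel base n2)))

;; Extremes for a hypothetical base channel value of 0.5:
(wood-channel 0.5 -1 -1) ; darkest, about 0.16
(wood-channel 0.5 1 1)   ; lightest, about 0.36
```

Because the two grain values are multiplied, the base colour passed to light is roughly the square of color, so the board renders somewhat darker than the color uniform alone would suggest.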

The resulting shader renders as seen in the above image. Not too shabby for a few extra lines! I’d say that our board now has a feeling of depth that it was previously lacking.

Keeping score

In the full code for the prototype, you may have noticed that there is a function that returns the current score (get-score), but nowhere do we use this function. This won’t do! What kind of game is it if we don’t know who’s winning?

To display the score, we’ll want to render a string to the screen. This is done much like any other sort of rendering. We create a mesh, and add it to a scene, making a new node. In the case of text, our mesh will be a series of rectangles that map to a texture that contains our font face. So first we need to load a font, which we’ll do with load-face. Next we create the mesh using the function string-mesh, which creates a mesh out of a string and a font face. Finally we add the mesh to a scene, although in this case we’re not going to use the scene we created, but a scene that Hypergiant provides by default: ui. ui is a scene that always renders last, with a fixed orthographic camera. For ui, (0 0) is the top left corner of the window, and positioning is done in pixels. Our resulting score string creation function looks like this:

    (define font "/usr/share/fonts/truetype/msttcorefonts/arial.ttf")
    (define score-face (make-parameter #f))
    (define score-node (make-parameter #f))
    (define score-mesh (make-parameter #f))

    (define (init-score)
      (score-face (load-face font 20))
      (score-mesh (string-mesh "Black: 000 White: 000" (score-face)))
      (score-node (add-node ui text-pipeline-render-pipeline
                            mesh: (score-mesh)
                            color: black
                            tex: (face-atlas (score-face))
                            position: (make-point 10 -10 0)
                            usage: #:dynamic))
      (update-score))

As you can see, load-face takes the path to a TrueType font (if you don’t have a font at that location, just change it to the location of a font that you do have), and a font size (in pixels). We then create a starting string with string-mesh. We’re being careful to create a mesh large enough to hold any score (Go scores are never in the thousands), since we’ll be updating this mesh in-place. Next, we add our mesh to the ui scene with the built-in pipeline text-pipeline. This pipeline expects a color for the text, which we are setting to black. The pipeline also expects a texture that corresponds to the font face used by the mesh. We get this texture by calling face-atlas on our font face. After we position the mesh, we set the usage of the mesh to #:dynamic. This is done because we want to update the mesh in-place. By default meshes are initialized (upon being added to a scene for the first time) as static, meaning their contents can’t be changed. By setting the usage to dynamic, we’re able to update the mesh when we want. Finally, we call a yet-to-be-defined function: update-score.

We can then stick this function in init:

    (define (init)
      ...
      (init-score))

And now we’ll create the function update-score. This function should modify our score mesh with the current score (which is fetched by the function get-score).

    (define (update-score)
      (let* ((s (get-score))
             (black-score
              (string-append "Black: "
                             (number->string (alist-ref 'black s))))
             (white-score
              (string-append "White: "
                             (number->string (alist-ref 'white s))))
             (score (string-append black-score " " white-score)))
        (update-string-mesh! (score-mesh) (score-node)
                             score (score-face))))

Most of this function is just building the string which displays the score. The last line contains the function update-string-mesh!, which modifies our score mesh with the new string. This function also requires the score’s node and the font face we’re using. update-string-mesh! won’t work if our new string is larger (in terms of number of graphical characters) than the mesh we originally created with string-mesh.

Finally, we’ll edit cursor-board-press so that every time the board is clicked, the score will be updated.

    (define (cursor-board-press)
      (place-stone (get-nearest-index))
      (update-score))

Now we can see the score!

Here we have our board, complete with scoring. I think you’ll agree that this has come a long way from our prototype. While this does not have all of the features you’d expect from a finished game – saving, options, perhaps online play or AI – we’re going to stop here as it looks reasonably complete at this point.7

Wrap up

I hope this tutorial has given you an idea of some of the things you can do with Hypergiant. You can see the finished code for the game of Go in Hypergiant’s examples directory along with some other examples.

The basic elements that you should have taken away from this tutorial are not numerous: scenes, cameras, nodes, meshes, and render-pipelines are the basic building blocks that Hypergiant uses to create graphics, and these can take you quite far. While I tried to cover these fundamentals, this tutorial didn’t really get into any extension beyond creating some simple pipelines. You’re not limited to that, however. For instance:

- Meshes are quite flexible, allowing for any sort of per-vertex data

- Shaders can get a lot more complex

- Nodes don’t need to be passed meshes or even render-pipelines: they’ll take any arbitrary data and a function that knows what to do with it

- Hyperscene can be readily extended in C

So really, there are no limits placed on what ends up on the screen. And there are some parts of Hypergiant that this tutorial didn’t even touch on, such as animated sprites, image and model loading, and more advanced use of scenes. So dive into the documentation! There’s lots to explore. If you’re ever wondering what a particular function does, don’t forget to take advantage of Chickadee. Finally, if you have any questions or comments, feel free to email me or chat with me (alexcharlton) on #chicken at freenode.net.

Appendices

Appendix A: Why CHICKEN Scheme

There are many game libraries, frameworks, and engines out there, but most of these are written in C++. I’m not alone in thinking that C++ sucks much of the fun out of programming. For me, no language matches the freedom of expression, and consequently the productivity, of Lisp.8 Scheme has become my favourite Lisp to work with, combining a small, simple core with an advanced macro system. It is well defined, with an active, evolving standard. If you’re learning to program, Scheme also has some of the highest quality learning materials available for any language.9

Scheme has many implementations that vary widely in quality, but CHICKEN Scheme has become my favourite. It implements the 5th and 7th revisions of the Scheme standard (skipping over 6, which diverged a good deal from all other revisions). CHICKEN has a great community, a good set of libraries (probably the largest collection for any Scheme), and an efficient implementation. CHICKEN is a Scheme to C compiler, so it creates executables that are native to the system that they were compiled on, and has tools for easy deployment. Furthermore, the bond between CHICKEN and C makes accessing C functions super easy.

Hypergiant could not have been made as it is in a language or implementation that lacks strong meta-programming facilities or does not compile to C. This combination of features is what makes Hypergiant’s unique rendering system, where functions for rendering every shader are automatically compiled to C, possible.

While most game engines take the opposite approach to their design – creating a C++ core and layering a scripting language on it – I believe that Hypergiant is a compelling argument that things are better the other way around: working in a high-level language and using a low-level language when speed is needed. This makes it so that the engine (library in this case) is more concise and easier to understand and hack, while sacrificing very little in return, as evidenced by Hypergiant’s pure-C rendering system. Additionally, this removes the artificial barrier between the scripting and host language, putting more power in the hands of game creators.

Appendix B: Installing CHICKEN and Hypergiant

Hypergiant depends on three external libraries:

OpenGL should already be installed on your computer, but the latter two you’ll probably need to install on your own.

When installing GLFW on OS X through Homebrew, an extra step is needed. Homebrew renames the library from its default name. You can fix this by creating a link that points to the library that gets installed. E.g. sudo ln -s <homebrew-lib-dir>/glfw3.dylib /usr/local/lib/glfw.dylib

If you already have CHICKEN on your computer, then installing Hypergiant is as simple as chicken-install hypergiant. If not, though, read on.

First we need to install CHICKEN. CHICKEN depends on having a working C compiler in your path as well as make. For Linux these are likely already installed. For Windows mingw is recommended. For OS X, you need to install Xcode.

You can download CHICKEN here. After extracting it, you’ll need to compile it. This is done with make. Rather than install in a location where you need root privileges, it’s recommended that you install it locally. You can set the install location with make PREFIX=<destination>. You also need to pass the platform that you’re on to make. So the full call looks like this:

    make PLATFORM=<platform> PREFIX=<destination>

For example, I do this:

    make PLATFORM=linux PREFIX=/home/alex/builds/chicken

Type make without any arguments to see a list of platforms.

Once this completes, finish off the installation with:

    make PLATFORM=<platform> PREFIX=<destination> install

If you’ve installed to a location that isn’t already in your PATH, add <destination>/bin. Then you can run chicken-install hypergiant to get started!

If you have any further questions about installing CHICKEN, take a look at its README.

Appendix C: OpenGL resources

Hypergiant depends on features that were first added to OpenGL’s core in version 2.0, over ten years ago in 2004. These features were made mandatory five years later in version 3.1. While you’ll be hard-pressed to find someone using a version of OpenGL this old, this is still often referred to as “modern” OpenGL. This is because, in moving from version 1 to 2, OpenGL introduced a large paradigm shift, moving from a fixed pipeline to a programmable pipeline. This allowed users to create their own programs (shaders) that run on the GPU, giving the user far more freedom.

That said, many of the resources that have been written for OpenGL only apply to the older “legacy” API, so you need to be careful to select something appropriately new.

Here are some resources to get you started:

- An intro to modern OpenGL: An online tutorial that does a good job of explaining the basic concepts

- Learning Modern 3D Graphics Programming: A well written online book that suffers a bit from its example code depending on an obscure build system

- OpenGL SuperBible: A (non-free) book, now in its 6th edition, that aims to provide comprehensive coverage of the most up-to-date features of OpenGL

- Song Ho’s OpenGL tutorials: Excellent in-depth looks at many of OpenGL’s fundamental concepts

- Modern OpenGL tutorials: Another tutorial that covers a wide range of topics

While all of these resources are written in C or C++, the conversion to CHICKEN code is fairly straightforward. All OpenGL functions and constants are exported by the opengl-glew egg with minor renaming to make names more Scheme-like. These symbols are all reexported by Hypergiant, with the prefix gl:.

Really, Hypergiant is a collection of libraries, but I’ll be talking about it as a whole. Check out the documentation to see what goes into Hypergiant.↩

See appendix C↩

I highly recommend Gregor Kiczales’ MOOC Introduction to Systematic Program Design (requires a Coursera account to view the content) as a starting point for new programmers, as it does a great job of teaching how programs are designed and not just the mechanics of programming. The Little Schemer is another well-regarded starting point, which focuses primarily on teaching recursion. Structure and Interpretation of Computer Programs is a more intensive introduction to programming (available for free online). Finally, for a more conventional, practical introduction, you could try Teach Yourself Scheme in Fixnum Days↩

Which weighs in at around 250 LOC↩

They may have more – such as a geometry or a tessellation shader – but they must contain at minimum a vertex and a fragment shader.↩

All of the resources featured in appendix C provide introductions to shaders. While all of the GLSL shader creation happens automatically in Hypergiant, porting shader code from GLSL to Hypergiant is fairly easy. Check out the glls documentation for details.↩

Of course, the first 90% of a game’s code accounts for 90% of development time and the last 10% of a game’s code accounts for the other 90% of development time.↩

That said, I’d take C over C++ any day. In fact, a good chunk of Hypergiant is written in C. Small languages like C and Scheme, based on a few fundamentals, make reasoning about programs much easier. And to me, being able to reason about a program makes everything else easier, too. If you’re working in a high-level language, if you can easily see why something is slow, you can easily speed it up. The more convoluted the language, the harder things can be to trace. And in both high and low-level languages, simplicity makes finding bugs easier.↩

I highly recommend Gregor Kiczales’ MOOC Introduction to Systematic Program Design (requires a Coursera account to view the content) as a starting point for new programmers, as it does a great job of teaching how programs are designed and not just the mechanics of programming. The Little Schemer is another well-regarded starting point, which focuses primarily on teaching recursion. Structure and Interpretation of Computer Programs is a more intensive introduction to programming (available for free online). Finally, for a more conventional, practical introduction, you could try Teach Yourself Scheme in Fixnum Days↩